Touching the future – ISMAR & AWE in Munich (1)

Hey everybody,

In October we had the great double trouble week in Munich, which hosted both ISMAR and AWE EU for our pleasure. I was there the whole week, checking out the latest demos, papers, talks and companies. After sorting through everything in the aftermath, it’s about time to point out some highlights. Coincidentally, the AWE team just published their videos today, so you can check out all their recorded presentations on their YouTube channel.

Speaking of videos, ISMAR created a new playlist for the 2018 presentations, though the list is not complete. In any case, it is worth a look, too.

Touching the future

Let’s take a look at ISMAR. While AWE is more of a B2B, product-focused handshake event, the ISMAR symposium is the research laboratory where universities, labs and company R&D teams meet to look further ahead. Algorithms that are commonplace today, like SLAM or depth camera usage, typically show up here at ISMAR well before they are consumer-ready. Besides panels and paper presentations there is always a demo area. Today, let’s focus on that one! Demos are always more tangible than a paper. ;-) Speaking of which… during this ISMAR I noticed a heavy focus on interfaces and how to approach them in a mixed reality space. It is still an urgent (and largely unsolved) issue, and we could see many demos and talks on it.

A lot of effort definitely flows into gesture and mid-air touch research, e.g. with the papers “Mid-air Fingertip-Based User Interaction in Mixed Reality”, “A Fingertip Gestural User Interface without Depth Data for Mixed Reality” and “Seamless bare-hand interaction in Mixed Reality”. But as usual, mid-air gestures and finger clicks don’t offer force feedback: no control, no feedback, no vibration or other substitute alert. It can also get annoying in longer sessions with raised arms, or when false positives are registered or gestures are not detected. I like gestures, but while we wait for them to mature, I was very pleased with some other tangible demos:

Making use of the real world

For example, I saw nice combinations of AR HMDs with smartphones or smartwatches. One smartphone demo was titled “Enlarging a Smartphone with AR to Create a Handheld VESAD” (VESAD = Virtually Extended Screen-Aligned Display). The video shows the idea. It’s a smart way to get haptic feedback, integrate existing devices into the landscape and profit from their advantages. Maybe sometimes it’s more convenient to use a small physical screen? Sometimes you take your (future AR) glasses off, but stay in the same MR data space… The demo shows a prototype of how to integrate the tracked smartphone and extend its screen space, which is not pinned to a wall but moves dynamically at your will (in your hand):

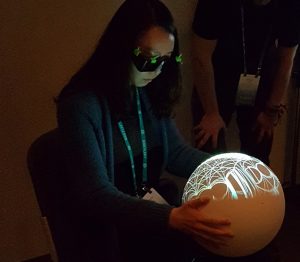

The smartwatch approach was also very intriguing! It was titled “swag demo: smart watch assisted gesture interaction for MR HMDs” and came from UVRLAB. The idea is to use the wrist-attached device for tracking, but also as a haptic touch input and an additional sensor (heart rate, gyros to support hand tracking). Unfortunately, there is no video available. But what caught me by surprise was another demo, titled “a low-latency, high-precision handheld perspective corrected display”. Not the sexiest name, but an awesome demo I didn’t expect when looking at it from the outside (photo on the right).

The idea is to do dynamic projection-based augmented reality on moving targets. Currently the outside-in tracking is still a bit of a hardware overkill, but it allows you to grab a white cone or a larger white sphere and move it freely in your hand (within the tracking volume and projector range). You put on 3D shutter glasses and get your AR content cast directly into your hands with correct perspective!

It was surprisingly elegant and beautiful. Different demos were shown, including a simple 3D model viewer (with the model resting inside the seemingly transparent sphere), a Jenga tower game and a marble maze game, where you had to move the marble over thin, curved 3D bridges by tilting the sphere (with the game simulating real-world gravity and physics). These demos presented the advantage of the system nicely: you get very fine and natural control over the interaction with the object, not only for changing your perspective on it, but also for manipulating it, since you hold the tool in your own hands. An older video shows their concept:

This reminded me again of how powerful non-wearable AR can be! You are free of glasses or other bulky hardware on your body and just enjoy added digital 3D value in your environment. I could imagine this “crystal ball” in my living room or in a children’s toybox. The sensation was really impressive, and it is definitely worth following their further research!

All right, that’s a quick update for today. To pass the time, you should check out the above videos!

Cheers!