Yet another Magic Leap One hands-on: my two fairy dust cents.

An Introduction to Magical Worlds

Finally, I had the chance to try out the Magic Leap One. Damn, it's about time! Or would it have been better to wait longer – for the Magic Leap Two or for further software updates? Find out today in my two cents on the leap discussion.

So, in the last few days I've been visiting the German company Holo-Light. They already have an ML1 on hand, and I got the chance to try it out there. Holo-Light is a software developer and service provider focused entirely on augmented reality. I reported on their Holo-Math and HoloLens stylus last year during AWE. So, thanks, Holo-Light, for having me! See you again next month!

You have probably read other reviews by now, like the one by Palmer Luckey or the one from Tested. So, I'll leave out the specs, keep things short and focus on my sometimes different view on things and where I see the potential. Let's a go!

Putting it on – My First Impression

Holding the ML1, it felt lightweight and well made. Nothing creaked. I liked the headband mechanism a lot. People rant that it is not as stable as the HoloLens or that it takes longer to find your sweet spot. I felt differently. I think the advantage here is that you just push the goggles up your nose as far as possible towards your forehead until they touch your skin and block your peripheral view. This was easy and hassle-free (for my head shape). I love this easy on-and-off for demo booths; HoloLens can be more annoying. But this comes with the trade-off of sweating… The pressure on your forehead and the larger surface touching your face became more and more uncomfortable after a while. Then again, HoloLens wins here with only a simple nose clip touching you (which is also easier to clean during tradeshows than full foam cushions). So, between a rock and a hard place here. No winner…

From my B2B tradeshow viewpoint, I didn't like the pocket computer (the Lightpack) much, since it is yet another device to clip onto users, with annoying (thick) cables running up to the headset that I got tangled in. But after using it for a while, I forgot about the Lightpack, and the reduced weight on my head was sure a relief (no surprises here). If only the cables were a bit longer (I'm almost 2 meters tall) and thinner, to be more flexible. The form factor of the disc was fine, though sometimes I wished for an included holster to put the controller in.

Field of View and Image Quality

Let's start with image quality. I was really surprised: I loved the sharpness, brightness and colors of the ML1. Some of this is down to their tricks, but in part you can already see how hardware has evolved since other devices were released two-plus years ago. The darker tinted visors helped with contrast and gave a bit more of an immersive VR feeling, and in a bright office this mix just felt right.

So, the field of view. The eternal problem child of MR glasses… you know it by now: Magic Leap increased the field of view a bit compared to HoloLens, but restricts your real-world field of view with the thick frame of the goggles. There is no open transition, e.g. at the lower edge (as with HoloLens or Meta). This sure helps trick users into believing "they finally managed to fill the full field of view with augmented 3D content", but for grabbing my coffee cup or avoiding chairs and tables while walking, it's rather bad. The bottom bar was really the worst for me. For example, when I was standing and talking to seated people at a typical distance, I couldn't see them at all. It really isolates you more, and for certified worker safety it's a no-go.

Natural Tracking and Interaction?

The 6-DOF tracking of the glasses worked better than I expected. I used it in an office during daylight, but with the blinds mostly closed. No direct sunlight. The walls and floor had enough detail (paintings, flowers, tables) to generate a stable mesh. Tracking sometimes jittered a bit, but overall it felt on par with others. So, let's look at the clicker controller. It claims to be 6-DOF, and this is typically where the ranting starts, because it lacks accuracy. I agree that a Polhemus or a Vive Tracker is way better, but for simple mid-air actions in interfaces I had no issues. I even used the painting function in Creator to trace all the edges of a table and check the accuracy and stability of tracking afterwards. At a "shaky to within a few centimetres" level, it didn't let me down. Maybe after reading the reviews my expectations were so low that I could only be positively surprised. The question remains: what do you want to do with it? Paint in mid-air in 3D? That worked fine on a coarse level. I could jump around like a floor exercise dancer at the Olympics, painting ribbons in the air, but I couldn't pinpoint welding positions at millimetre level to help out automotive companies (like metaio did with the Faro arm). For accurate tasks it's a no-go, but I couldn't help liking the idea. We are used to 6-DOF controllers in VR, but not in AR. Companies still fail with voice control, easy gestures and simple controllers. Having the first fully tracked controller in AR felt good. Not done, but a good start!

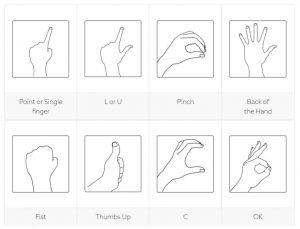

So, why still go for a controller instead of hand tracking, like Leap Motion's Project North Star? On the one hand, it is surely due to technical limitations; on the other, it allows more natural interaction. What? A controller is more natural? Sometimes it sure is. We are used to using tools in our hands. The extra haptic feedback and buttons allow more complex interactions and more natural feedback. It might be an interim solution, given the poorly tracked controller, but I sometimes miss it now when using my HoloLens. Shouldn't gestures be the way to go, then? Not always, and not for special tasks. But for "daily business", full-blown gesture interaction must do the trick for AR glasses I wear all the time. Unfortunately, Magic Leap did not deliver here. It seems time was too short. The demo application for checking whether gestures work (see image) was fine. But all menus were operated with the controller, and only the Sigur Ros demo allowed a bit of dreamy hand interaction. It felt bad that ML didn't take the time to think the interface design through thoroughly, based on their gestures! This should be part of the toolset, with guidelines for all developers and a standard. Instead, it felt more like "oh, we have gestures, too, look!".

Speaking of looking… eye tracking was there, but it also felt "not done". There was not a single demo where I could really enjoy the change of focus between the two distances (you already know that it ships with only 2 layers of waveguides, right?). I placed a lot of virtual objects at different positions in Creator and changed focus between near and far objects. Bad news: the switch happened with a delay of 2 to 3 seconds, and the color representation also changed a lot (poor calibration between the two layers?). The good news: the defocused zones felt realistic and gave a nice touch to the AR experience. It didn't reach the quality of Avegant (which I tried last year), but it's worth integrating… if it works. Which it didn't, due to the delay mentioned. It is of no use at all if you can notice the change; it must happen so quickly that you cannot. Will a software update help? Or are the eye tracking cameras plus computation just too heavy for the device? I really had high hopes here and was crushed.

Reconstruction and Meshing

The reconstruction of my space felt quite swift and detailed. It also seemed to reach further than others. I didn't take the time to measure it, but holes in the mesh were filled quickly, while borders, edges and details get lost the same way as with others. No real good news here. But the worst part again lies on the software side: apps like Creator force you to scan your environment during start-up, but this mesh is lost when you re-open the very same app. Also, other apps don't seem to have access to the same mesh. The main menu doesn't place menu items in your space, but just lets them float. Even while I was using Creator, it lost my space: after a successful initial scan I left the room, waited briefly and came back. The app forced me to rescan everything instead of recognizing the space. Again, the operating software seems beta here. Too bad. Nothing to be seen of automatic gap closing, better edge detection, etc.

Speaking of software… what to do?

The glasses still need to find their USP. B2B scenarios in rough environments seem impossible; artistic demos seem to be the way to go for Magic Leap, avoiding heavy conflicts with competitors and facing less strict KPIs for their system. Everything looks nice in a fun, cartoony way, and you can enjoy using it while forgetting about the slightly worse tracking, the lower accuracy and the work-safety issues of the limited field of view. The extra visual quality helps a lot in demos like Sigur Ros, and it was a great trippy feeling when your environment starts to come alive and you dive into this mixed reality. You can see the device shine here. Everything floats (to hide tracking problems, while everybody will claim it is for a more immersive dream landscape), and the jellyfish react slowly to your hands. Good fun. For a change, the restricted field of view helps you dive into it. Magic Leap One is a carnival device. The only other really polished app was the aforementioned Creator. Even its limited set of items gave a great idea of mixed reality: placing tracks in mid-air, marbles that roll with simulated physics, dinos that fall from tables… a great fun tool for kids (and us) to play and learn. I'd love to see the old physics game The Incredible Machine on it, please! Anybody? So, the software side was really slim and beta; there's not much to do. Even the browser didn't feel well thought out. Scrolling, interacting and especially typing URLs with the controller was a hassle. Gestures were nowhere to be seen. Too bad… You get impressed by two demos, but then the fun is over.

Holy Grail or all Garbage?

So, getting to my conclusion. Having read other reviews before trying it, I was positively surprised. But from a "before release" point of view, I'm totally crushed. All of us expected the holy grail of AR, and that totally failed. Overhyped, period. Looking only at the tech available in 2018, it is a valuable step forward, with some good and some bad decisions made. The downs and ups in short:

The most disappointing part was the waveguide display with only two layers. But even worse was the poor eye tracking, which is not usable. Given that, even a single layer of waveguides would have been enough. The second disappointment was the missing use of gestures throughout the system and the lack of a clear UI guideline or concept. Next, the software really didn't feel ready: no storage and sharing of the high-res spatial mapping, no clear interface design, no app store or social hub… Was ML under too much pressure to release in the end? For sure. The software development probably needs more than another year to get up to speed. This is surely where HoloLens was three years ago or earlier. Infrastructure baby steps… that need to be done. Hopefully software updates can improve tracking, too.

On the flip side, I liked the visual quality, having a 6-DOF controller (mark 1…) and, in the end, the disc computer. If they fix the details and adjust the cable and the headgear blinders, I'm happy with this approach. Others like DAQRI do it, too: less heat and weight on your head outweigh the disadvantage of a clip-on belt thingy. I can already imagine a lot of fun demos with the ML1. Things just need to get rolling. With all their money, I was expecting more pre-installed content. But the possibilities are there! The added graphics power and the controller allow for more (and different) experiences tracked in full space. It feels more immersive (or disconnected) than other AR glasses. This could be used to their advantage.

Verdict

Well, buy or burn? For playing around and showing off fun, graphics-heavy demos, it's worth it if you have money left in your budget. But for real tasks in a B2B context, it's still too early. Missing software and toolsets still make things harder for developers. With the Magic Leap One, the hype was not justified at all. Plus there's the silly-looking steampunk outfit you sign up for when wearing it. For industrial use beyond marketing, it's not there yet.

But it is an important move for the market and the AR landscape. After all, the ML1 seems to me like the first real competitor to Microsoft. Other devices suffer from tethered PCs, weaker spatial tracking, an even smaller FOV, etc. We now have nothing magical, but a good step forward that pushes competition. I'm really hoping for a HoloLens 2 in 2019 with a bigger field of view, focus switching and fully integrated gestures that allow more, e.g. why not interaction with real objects, recognized by machine learning? With some fairy dust spread, you can dream up a lot of things, right? Finally, after everybody has been disappointed for a while, the pressure on ML is off and everybody can get back to business… let's hope for lots of software updates soon and aim for bigger things in 2019! Things are getting more vivid now with more players! That is the best ML could give us for now.

Cheers,

TOBY.