Testing Lightfield AR with Avegant (AWE Part 3)

Today I’d like to continue with my impressions and live demos from Augmented World Expo in Munich. Last time I wrote about the Meta glasses. The big field of view lets you forget the window, and the gesture concept can be a great step towards more natural human-machine interaction. I finished by talking about the problems of today’s augmented reality glasses: the “floating screen at a fixed distance” feeling and the vergence-accommodation conflict. Is there a solution yet? Time to look at lightfield displays from Avegant.

The problem of today’s AR and VR glasses

To get there, let me take a brief detour. Almost all commercially available smartglasses today cast one or two screens in front of your eyes. These have a fixed perceived distance and force your eyes to focus there – as if you were holding up a piece of paper at arm’s length. This works fine for virtual objects at that position. But what happens when an item is far away? In the real world, your eyes would adjust both focus and vergence: both eyes would turn slightly outwards, becoming more parallel, while automatically focusing at that same far distance. This is the natural way. Monocular accommodation (focus), binocular vergence and the brain’s share of the data analysis (image disparity and blur) all work in concert, automatically, for the real world…

But this breaks with today’s HMD screens. If the virtual object is far away, your eyes converge on it but still need to focus at the nearby virtual screen distance at arm’s length. This breaks the automatic coupling of your eyes and results in the vergence-accommodation conflict (VAC). It might hurt, lead to headaches or be exhausting. Some people are more affected than others – just like when going to 3D movies.
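To put some rough numbers on this, here is a minimal Python sketch. It assumes an interpupillary distance of 63 mm and a screen focused at 0.7 m; both values and all function names are my own picks for illustration, not specs of any real headset. Vergence is expressed as the angle between the two lines of sight, accommodation in diopters (1 / distance in meters), and the conflict as the gap between where the eyes should focus and where the screen forces them to.

```python
import math

IPD_M = 0.063             # assumed interpupillary distance in meters
SCREEN_DISTANCE_M = 0.7   # assumed fixed focal distance of the HMD screen


def vergence_angle_deg(distance_m, ipd_m=IPD_M):
    """Angle between both lines of sight when fixating a point at distance_m."""
    return math.degrees(2 * math.atan(ipd_m / (2 * distance_m)))


def accommodation_diopters(distance_m):
    """Focus demand in diopters for an object at distance_m."""
    return 1.0 / distance_m


def vac_diopters(virtual_distance_m, screen_distance_m=SCREEN_DISTANCE_M):
    """Mismatch between the focus the object asks for and the focus the screen forces."""
    return abs(accommodation_diopters(virtual_distance_m)
               - accommodation_diopters(screen_distance_m))


for d in (0.7, 2.0, 10.0):
    print(f"object at {d:>4} m: vergence {vergence_angle_deg(d):.2f} deg, "
          f"conflict {vac_diopters(d):.2f} D")
```

An object placed exactly at the screen distance gives zero conflict, which matches the observation that content at that position works fine. The further the virtual object moves away from the screen plane, the bigger the mismatch gets.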

But it’s not only about comfort – it just does not look natural if all objects, spread out at different distances, are perfectly sharp. If you really want to integrate augmented objects seamlessly, you need even more than this realistic feeling of distance. You need to defocus objects, just like your physical eyes do with real objects all the time! This is a key feature for an immersive, integrated sensation of augmented objects.

The lightfield tech promise

Magic Leap, Avegant and others claim to have the solution: displays that let us focus and defocus naturally on virtual objects, working in harmony with real-world items.

We don’t really know yet what Magic Leap is doing, though there are signs and rumors. I’d like to mention Vance Vids’ videos here and Karl Guttag’s observations on ML tech. Worth reading and watching. But we do know that others, like Avegant, use lightfield technology and waveguide optics to render multiple perspectives of virtual objects and beam them directly into your eyes. It takes more optical and general hardware engineering to miniaturize all this, and it takes more computational power to calculate multiple perspectives, layers or slices of your 3D objects in real time, each image with a slightly different perspective. All of them are cast to your eyes, and the optics do the magic trick of letting your eyes pick the right ones. But how many slices are needed, and won’t it kill the resolution of today’s devices? Is it possible to render all this in real time at all?

Nobody likes to explain their magic tricks, and during my interview Avegant wouldn’t either. We do get an idea when we look at research projects from the past, though. Stanford’s Gordon Wetzstein talks about 25 images per eye per frame (a 5×5 grid). Nvidia showed an earlier lightfield prototype with 14×7 perspectives in total. So how many do we need?
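To get a feeling for the resolution question, here is a back-of-the-envelope sketch in Python. It assumes the simplest possible scheme, where a single panel is tiled into a grid of views so that each view only gets a fraction of the pixels. The 1280×720 panel size and the function names are my own assumptions for illustration, not figures from Avegant, Stanford or Nvidia, and real systems may well share pixels far more cleverly.

```python
def view_count(grid_cols, grid_rows):
    """Number of perspectives that have to be rendered per frame."""
    return grid_cols * grid_rows


def per_view_resolution(panel_w, panel_h, grid_cols, grid_rows):
    """Pixels left for each view if the panel is simply tiled into a grid."""
    return panel_w // grid_cols, panel_h // grid_rows


PANEL_W, PANEL_H = 1280, 720  # hypothetical panel, purely for illustration

for cols, rows in ((5, 5), (14, 7)):
    w, h = per_view_resolution(PANEL_W, PANEL_H, cols, rows)
    print(f"{cols}x{rows} grid: {view_count(cols, rows)} views, "
          f"about {w}x{h} pixels per view")
```

Under this naive tiling, the rendering workload grows with the number of views while the pixels per view shrink by the same factor, which is exactly why the number of perspectives matters so much.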

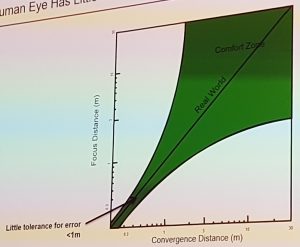

During an interview with UploadVR, Avegant’s CTO Edward Tang stressed that you really need more spatial resolution in close proximity, since the human eye has little room for error close by (see graphic from Avegant). He mentions 20 layers nearby, while far out even a single one might be enough to work well. Hence, 21 layers could do the trick? Maybe a 5×5 grid like the one from the research above is not such a bad idea then. But enough theory, what does the resulting image look like? Can Avegant’s display live up to the hype?
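Why would so many layers sit close to the eye? One way to picture it is to space the focal planes evenly in diopters (1 / distance) instead of evenly in meters, since focus demand changes quickly up close and hardly at all far away. The Python sketch below does just that. The plane count of 21, the nearest distance of 0.25 m and the even diopter spacing are assumptions of mine for illustration, not Avegant’s actual layout, which they did not disclose.

```python
import math


def focal_plane_distances(n_planes=21, nearest_m=0.25, farthest_m=math.inf):
    """Distances in meters of focal planes spaced evenly in diopters.

    Needs at least two planes; the last plane may sit at optical infinity.
    """
    near_d = 1.0 / nearest_m
    far_d = 0.0 if math.isinf(farthest_m) else 1.0 / farthest_m
    distances = []
    for i in range(n_planes):
        diopters = near_d + (far_d - near_d) * i / (n_planes - 1)
        distances.append(math.inf if diopters <= 0.0 else 1.0 / diopters)
    return distances


planes = focal_plane_distances()
within_2m = sum(1 for d in planes if d < 2.0)
print(f"{within_2m} of {len(planes)} planes lie closer than 2 m")
print("first five planes (m):", [round(d, 3) for d in planes[:5]])
```

With these assumed numbers, the large majority of planes end up within arm’s reach or just beyond, and only a handful cover everything from a few meters out to infinity. That at least fits the “many layers nearby, one far out” picture from the interview.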

Live Demo with Avegant

The live demo was shown in a rather dark room. The wired headset felt a bit bulky and big, and tracking was OK (using HTC Vive’s Lighthouse system), but Avegant restated that they only combine third-party elements to show a demo. After all, their focus is solely on the display technology. Alright, let’s take a look.

During the demo tour I got to see a female avatar, rendered in high quality, with detail down to a single hair. Brightness and sharpness were really great. No screen-door effect could be seen, i.e. no pixel grid was visible. The field of view felt good, but objects were still cut off at the edges. The interesting part was best observed in the planetary system demo, where multiple planets drifted in mid-air and I could move up close to the Earth’s moon and make out all the details and craters on its surface. Focusing on the moon made the planets in the back naturally blurry. When I changed focus to the planets in the back, the moon turned blurry, just as you would expect in the real world! This was truly amazing! I could not make out any jumps or layers. Well done! The switch of focus, the feeling of convergence and accommodation felt natural to me – and I’ve been playing around with stereoscopic vision devices a lot in the past. I’ve held my finger in front of my head for cross-eyed viewing a million times. In this demo setup it felt fine!

My Conclusion and what’s next?

The demo was presented very well and I was stunned by how impressively the optical illusion worked. Unfortunately, there was only little time, and a longer test could have revealed more. How does the setup work today under normal light conditions or in daylight? How does the focus feel when virtual objects are rendered next to (the same) real objects? Will it still feel natural? Is the focus/defocus accurate? Is eye fatigue really minimized or gone with this display tech? We will have to wait and see another time.

But the prototype demo showed a big step toward immersive augmented reality displays. A huge problem solved – or at least tackled in a version 1 to build on from here. If future designs can be slim and offer long battery life, this sure seems to be a must-have for everyday glasses!

Once all these issues are solved, we can focus on the next problems (sigh), e.g. a complete world scan and a shared 3D representation for city-scale tracked AR experiences, plus easier content sharing on the go with 5G and 6G. Google just pushed out their content platform Poly … maybe there is more to come for user-generated world scans, too? Well, let’s keep that for another day.

OK, I hope you enjoyed my insights. I also tried to summarize VAC, lightfields, etc. in a simple way. Any thoughts or feedback? What would you like to see covered in more detail? Let me know! Cheers!