Meta Hands-On and more on AR glasses (AWE Part 2)

During AWE EU in Munich, Ryan Pamplin gave a talk on the Meta 2 and spatial computing. I had the chance to schedule a private demo session beforehand, and today I'll share my impressions from the presentation and my live demo. Is the Meta worth the wait? What other glasses might do the trick, and what's next?

The On-Stage Demo and Meta news

In his talk, Ryan presented Meta's current status and upcoming news, and gave a quick tour and live demo (see my video below). If you haven't seen a Meta demo, make sure to watch the 7-minute show to get the idea. For those who attended AWE US or other events this year, though, there was nothing new to see.

You don't know the Meta? It's a full-fledged augmented reality headset with a 90° field of view, full room-scale 6-DOF head tracking (you can move as far as the wire reaches), and a 2.5k resolution at a promised 60 fps. The tech behind it is similar to the competitors' (Microsoft first among them): two monoscopic fisheye cameras with a combined 270° field of view, supported by two 6-axis IMUs. The perfect Hololens counterpart, you would say! Read again… there's still a wire attached…

This obviously makes the device more desktop-bound, and Meta's marketing is heading in that direction, too: replace all your screens with virtual ones, set up virtual decoration, etc.! Ryan says he has already thrown away the screens in his office! One thing is certain: the graphics look really great, as you would expect from a dedicated GPU in a wired PC.

During the talk we learnt about Meta's plans to ship a two-user collaboration feature in Q2 2018, which is pretty useful if you want to “work as usual” but no longer have any screens to look at together. Too bad it's not there yet.

The Meta now supports OSVR and WebVR in Firefox, and Ryan listed A-Frame, Sketchfab and Dance.Tonite as examples. Surprisingly, SteamVR is now supported, too. One might ask how you would play today's VR experiences on an AR headset with gesture input. I don't see the point (yet); supporting Windows Mixed Reality would have made more sense, although the platform battle might start soon and closed ecosystems are sure to emerge more (and annoy). Ryan talked about 2018 “being the year of the killer apps”: can't wait, please surprise me! There is certainly plenty to explore, and there are great use cases out there. To pass the time (while waiting for your almost-shipped order), it's worth looking into the spatial interface principles Ryan mentioned. OK, without further ado, here is the stage demo given during AWE EU:

My Hands-On Experience

Then it was time for my own live demo. I'm glad it worked out, and thanks to the very welcoming Ryan for the tour he gave me. Nice guy! OK, so, dear reader, you've seen the specs, you've seen the head strap and lens setup and what it looks like, so let's cut to the chase: let's put it on. To me, the headset felt a bit slippery, and I could imagine it getting sweaty on the forehead after a while, but the overall weight distribution felt fine and comfortable. A nice detail is the magnetically attached pads, which you can easily swap to find the best fit or for hygiene reasons (swapping in a smaller one also gives you a bit more field of view, since it moves you closer to the screens).

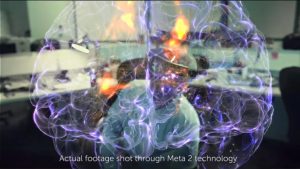

The demo ran on a regular Windows 10 system with Unity. After an initial room scan I could give it a go myself. My first thought: the field of view is pretty big and augmented content is barely cut off. The promise is kept. Brightness is pretty great as well (only tested indoors in a normally lit room). Everything looked very crisp and colorful. The rendering power of an attached PC really pays off! All content shown (the same as in the video above) looked very detailed and, subjectively, surpasses the Hololens demos I've tried so far. The brain and eyeball demos look really great in detail and gave me a real AR workspace feeling.

Hmm, but then we have to talk about the bad things. The overall 6-DOF tracking was really shaky, and no virtual object or screen really stayed in place. The room (as shown) had some glass walls, but I'd say it had plenty of detail and structure for feature detection. You might expect SLAM to be well understood by now, but it seems the devil is in the details. I could not work with a virtual screen, or live with virtual flowers or decoration in my room, that constantly shakes, even if only a little. When holding still (not even breathing), you wouldn't really notice the tracking issues, but when you turn your head, pinned windows swim and objects placed on the floor jitter by a few centimeters, up to maybe 10 to 15 centimeters at close range.

Could my brain filter it out? Maybe, but I expect more from an AR headset dev kit in 2017. Maybe it was caused by a rushed setup, as Ryan said, but without meaning to be mean, I doubt it. In their place, I would have taken the time for a perfect setup. To be even meaner: I expect it to “just work” in any office environment that is not plain white or pitch black.

Gesture recognition was the next big thing I was eager to try. Every time I run a demo with other glasses, first-time users tend to grab in mid-air to touch and manipulate objects. That missing piece, which Meta sets out to provide, would be so awesome. I believe the engineers spent a lot of time on a well-thought-out UX design, and it has huge potential. But again, it didn't work out of the box. Ideally (i.e. in a consumer product), this would work without training. In reality it often failed at the beginning, but I got used to it after a while and got the hang of pinching the air. Zooming objects with both hands is good fun, although I had to repeat the gesture several times before it worked. The hand occlusion gives you a sense of depth, but it is not accurate enough to make the interface controls feel natural. Hm, maybe Meta saved some money on algorithms or sensor hardware: hand recognition only worked reliably after I pulled back my shirt sleeve. Does it work with all skin tones? Perhaps copying the Leap Motion approach, which works way better, would be an idea. Maybe a long-term demo would let me learn and accept the current state (it's a first-gen dev kit, after all), but on that day reality couldn't live up to the marketing promises and the demos I had seen before. To me, it didn't feel ready to ship yet.

To sum it up

I really like the overall approach, the great visual quality and the field of view. Occlusion handling for gesturing hands is a great detail, and if software updates can improve it along with the overall tracking, I'm happy to follow along. A wireless version and more cool stuff like object recognition will come… but we don't know when. If you need to do a “real” AR glasses demo, I would stick with the competitor(s) for now. If you have your own research department, some time to spare and faith in software updates, you could go for one to enjoy the view, but don't throw away your screens yet!

How to proceed?

As mentioned last time, AWE showed a variety of smart glasses. While Hololens currently leads the race for complex 6-DOF inside-out stand-alone solutions, there are a number of “assistant reality” glasses suited to special use cases. If a HUD is sufficient for your picking task, you might go with Vuzix, Epson or Trivisio (or even Google Glass). If you need a slim design, I still like the ODGs a lot; for rugged environments, check out the RealWear designs or the DAQRI helmet (it feels too heavy to me, but its thermal vision is a cool USP). I've only mentioned a few, but you get the drift. A less immersive, slim setup might be sufficient for your B2B task.

The Hololens shows us great tracking, the Meta great visual quality and field of view. Gestures, we can see coming. So, how do we proceed, and what will be the next big step? For truly immersive and stunning AR, we still need to get rid of the “floating screen feeling at a fixed distance” by solving the vergence-accommodation conflict. Yeah, you know what's coming… my review of Avegant's light field is up next. Be right back, cheerio!