AWE in Munich: “after 7 minutes people accept holograms as being real”

From flat ware to spatial ware

Augmented World Expo came to an end last Friday and it’s time to get back to the desk and digest the latest info. There were some really great talks and innovative ideas to see. We were not disappointed: the show was pretty packed, and at the end three beautiful Auggie awards were handed out once more. There were also some VR highlights to try out, like the high-res Varjo VR-2 Pro with great finger tracking from Ultraleap. I finally got the chance to try out the HoloLens 2 (including updated VisionLib object tracking) and the fresh and “under construction” Lenovo A6 AR glasses. But today, I want to start with an overview of the event, the talks and some other highlights.

To kick things off, Ori opened the show and talked about the discrepancy we live with every day: for 50 years 3D content has been viewed on 2D screens, and AWE is on a mission to help the arrival of spatial computing: “we are spatial and so should computing”. Since the early days of computing we have been bound to flat output devices. Obviously it was and is a tech problem, everybody would want more. Ori quoted Tom Furness with the words “we all had our dreams, but tech wasn’t there”. An interesting thought from Ori to set the stage: the origin of the word “screen” actually refers to blocking something. Wiktionary defines it as “a physical divider intended to block an area”, and the German word for screen, “Bildschirm”, derived from “Schirm”, also signifies blocking something (rain, people, sun). We are separated from other people, our data, any content. Time to break up this force field and dive right into the middle of our data. Spatial computing became the tagline for everything at AWE: get information on location in real time and make it accessible to us as human beings in the best possible way. Not adapt ourselves to neck-pain-causing screen surrogates. Let’s go spatial and make the world the medium! (Let’s go spatial wikipedia!)

Winners: The Auggie Awards

Talking about going spatial: the three awards were given out on the second day. The “startup to watch” award was won by Spatial First. ManusVR won the race for “Best in Show VR”. I tried their latest demo (a turbine maintenance training with their haptic gloves) and it was pretty neat and well done. Still, it’s a bulky setup with its drawbacks and limitations. Well, I don’t want to start a discussion on finger tracking versus gloves here (not today). Congratulations also go out to Re’flekt for “Best in Show AR”. If you haven’t come across one of these three companies yet: time to catch up and check out their work.

Anniversaries: AWE and a one-year-old

Congratulations to AWE on the big 1-0! 10 years of AWE and the 4th year in Europe. And a newborn arrived during AWE 2018: OpenARCloud.

They also had a session to present last year’s work and their plans. I do support their approach, and it’s a great initiative to try to set something open against the walled gardens of the big players. What’s the plan? To create an open spatial computing platform (OSCP) with a reference implementation. In the long run this should create a digital twin of the real world to use for any AR scenario. With these definitions, in parallel to an open web architecture for flat land, we must try to keep an open and interoperable system as the base that is non-commercial per se. Companies are able to implement their own version of the stack, but it should not be closed source owned by one player.

OpenARCloud is currently reviewing the draft of the standard for their GeoPose locators (voted on October 31st) and plans to have a working, viable version of the OSCP by the end of 2020. Not only for humans with their phones or glasses but also for cars, robots and any other crazy IoT 5G gadgets.

If you also support this cause, feel free to join them!

Talks and Dev Tracks

As usual there was the center stage for the bigger players and panel discussions, plus parallel dev tracks for the nerdy part of things. I typically prefer the smaller sessions to avoid too much marketing speak. But the quote in the title comes from a great discussion during the session with Bosch, Re’flekt and Microsoft: 7 minutes to make people believe the objects are real. I can totally see this happening in a well-designed AR case (not even talking about visual quality). Especially with solid tracking and natural interaction (hello HoloLens 2!). There was good advice from industry players along the way, including to focus on one problem at a time, as AR just cannot solve everything at once. Sometimes buzzword-trapped managers try to solve everything by adding more AR to it. But that’s just not how it works. Obviously, there are still many restrictions to the tech (coming back to Tom Furness).

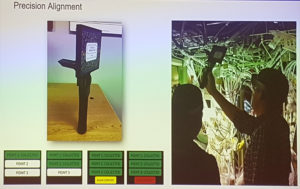

But AR can make a real difference in today’s B2B processes. Boeing showed a great example with their “BARK” system for AR-guided wiring and part installation in their aircraft. The HoloLens-based solution actually solves their problems and speeds up the process. But even here some tricks are needed. An interesting piece: they use a tracked physical pointer tool to get the right 3D position for the HoloLens overlay. Even the big players need to fall back on manual 3-point initialization with a marker-based tracker. Funny, but it is simply best practice and it gets the job done.
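For the dev-minded, here is a minimal sketch of what such a 3-point initialization boils down to in general. This is the textbook idea and not Boeing’s actual implementation, which was not shown in detail. It assumes you know three non-collinear reference points on the CAD model and have touched the same three spots with the tracked pointer. All names (`frame`, `three_point_alignment`, `transform`) are made up for illustration.

```python
import math

def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))

def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    n = math.sqrt(sum(x * x for x in v))
    if n < 1e-9:
        raise ValueError("points are coincident or collinear")
    return tuple(x / n for x in v)

def frame(p0, p1, p2):
    """Orthonormal axes (x, y, z) spanned by three non-collinear points."""
    x = normalize(sub(p1, p0))
    z = normalize(cross(x, sub(p2, p0)))
    y = cross(z, x)
    return (x, y, z)

def three_point_alignment(model_pts, world_pts):
    """Return (R, t) so that world = R * model + t for the picked points.

    model_pts: three points in model (CAD) coordinates (assumed known).
    world_pts: the same three points as measured with the tracked pointer.
    """
    m = frame(*model_pts)
    w = frame(*world_pts)
    # R maps each model axis onto the matching world axis: R = sum_k w_k * m_k^T
    R = [[sum(w[k][i] * m[k][j] for k in range(3)) for j in range(3)]
         for i in range(3)]
    rotated_origin = tuple(sum(R[i][j] * model_pts[0][j] for j in range(3))
                           for i in range(3))
    t = sub(world_pts[0], rotated_origin)
    return R, t

def transform(R, t, p):
    """Apply the rigid transform to a single model point."""
    return tuple(sum(R[i][j] * p[j] for j in range(3)) + t[i] for i in range(3))

# Example: the model is rotated 90 degrees around z and shifted by (2, 3, 4).
model = [(0, 0, 0), (1, 0, 0), (0, 1, 0)]
world = [(2, 3, 4), (2, 4, 4), (1, 3, 4)]
R, t = three_point_alignment(model, world)
overlay_point = transform(R, t, (1, 1, 1))
```

With measurement noise, three points only give an approximate pose, so in practice one would probably pick more points and do a least-squares fit. For a first overlay, this simple frame-to-frame mapping already shows the principle.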

Besides other interesting talks, e.g. Admix on dynamically placed ads in VR (oh no!), there were also quite disappointing ones, like the one with Greg Ivanov from Google: they just showed already known information and their “crazy” vision that more understanding of the scene is needed to go further. Yawn. Better to jump to other sessions (which we can hopefully watch again on YouTube sooner or later), like the talk from Daniel Seiler from AUCTA.io on “the way AR might change how we think”, which I recommended beforehand:

Sometimes it’s good to take a step back and think about the new tech before rushing into things. People often don’t see the negative consequences of innovations, but only the benefits. Software is starting to eat our world and our skills. AR can lead to more productivity, but people might not remember anything anymore and become dumb peasants. What does the calculation look like for humans? E.g. how much energy do I invest to memorize something in contrast to looking up the information? Do I remember my way or do I take out Google Maps? In the given example Google seems to be at the sweet spot of the cost-benefit calculation. How will AR change our world? What if we all wear AR glasses in face-to-face communication one day? Does it lead to more misunderstandings? Will it be uncomfortable or impolite? Surely everyone can see the distractions caused by it. But to continue the thought Daniel added:

“What if AR glasses were mandatory?” Everybody will get scared and spooked, but at the same time say that this will never happen. Mandatory glasses might break society if we are flooded by ads or information manipulation all day. But what if this “mandatory” sneaks in somehow? What if the social pressure gets too high and we see more benefits than drawbacks, FOMO at its best? Finally, what happens if the system does not work? Will we be lost? Socially outcast? Too dumb to survive? Without access to physical world items and basic infrastructure? Imagine a real-world toaster with digital-only AR buttons to activate it… refreshing thoughts to avoid Black Mirror (while waiting for real AR consumer glasses).

Speaking about consumer glasses… All-time pro Tish Shute had a great and packed talk about, well, everything. I guess it’s worth waiting for the YouTube video to see all the demos and research work she reviewed and summarized. One example I didn’t know about was the “DynamicLand” project. I always love projected AR and smart environments that avoid glasses and make social interaction easy.

Interaction with the world

Talking about interaction with the world… I want to dive deeper into finger tracking, HoloLens 2 plus gestures, etc. in the next post. Today I want to close with two more things I really liked during AWE in Munich. The crowd-funded Litho AR controller surprised me positively. I had seen the videos before, where one could use a small finger-attached gadget to control AR apps or your smartphone just by pointing, tapping or sliding. It looked a bit odd. But when I tried it out live, it was very convincing to me. It worked flawlessly and was good fun. I could even place an augmented JENGA tower in space and pull single wooden blocks out of it. Accuracy was fine and the demo intuitive. If you are tired of air-tapping on your HoloLens or want something to play around with for 200 bucks, I’d say this is worth a look. I could imagine this approach as a useful standard once we move to AR glasses. Air-tapping takes too much effort (cost-benefit calculation for lazy humans) and an additional touch screen might be outdated some day (not talking about UX nightmares). Maybe the hardware needs some tuning: maybe a single ring? Maybe a more flexible size? (I have rather thick, sausage-style fingers…)

So, last for today and most fun to watch was the dev track talk from Jyoti Bishnoi and her family: “Rethinking Game Controllers in AR”. This fits with the above. Tapping screens in AR is often annoying. Jyoti, coming from an educational background working with kids (including her own as great beta testers), stressed this part: holding up a device as an AR window and then touching 3D objects that somehow lie behind it is awkward and sometimes hard. They presented some fresh ideas and claimed that we should be the controller and that creators should make us move more! Object tracking could be used in AR to make anything an input device (like a hand puppet), and facial gestures can be used as controls, too. Great fun: open your mouth to become a real vacuum cleaner for virtual dust or items. Use your body pose as a controller for a virtual drum set to create a step-by-step recording of a drum pattern. We could make way better use of the spatial world around us, and of our own bodies. Maybe sometimes the hardware is cumbersome or lacks the best solution. But then we have to ask ourselves even more: what’s inherent in the medium? We need to solve for the given device, the existing software and especially for the targeted user group. Then we can have a lot of spatial fun.

… and the biggest disappointment during AWE was that the glasses below were just… regular shades. Dammit! :-)