Objects in the AR glasses may appear closer than they are

We thought Magic Leap would finally show their glasses yesterday, this time for real! Today I’d like to comment briefly on what we learned during their one-hour Twitch show.

Measurements distort results

Mirrors and glasses distort reality. Magic Leap finally wanted to use its overdue chance to present the view through their Magic Leap One glasses to a broader public on their Twitch channel. Last time we saw only a dead device with a blue LED. Since none of us watching from the couch could try out the glasses ourselves, they had to record everything with “old school” camera technology and present the resulting view on our flat screens. Showing AR glasses views to a non-wearer is always annoying. Microsoft also cheats with its outside view and mixed reality capture (showing pitch black as a color and a full field of view). Typically we see high-key marketing videos that don’t reflect reality. What I was wondering beforehand was: how will Magic Leap pull off the trick? How will they show us their work in progress, especially with the very limited field of view? Even without AR content, this welding / diving goggles layout already restricts the framing. How would they try to convince us of a great experience in a flatland video show? Did it work? How close are we to a release? Is the hype still worth it?

Today’s Twitch Show

So, what did we see? The show opened with Graeme Devine, Chief Game Wizard, commenting on content development and the community spotlight. They briefly addressed the known issue of the limited field of view and how to tackle it. A new sample called “rain” will be available on the developer portal to show options for vignette adjustments, giving smooth transitions instead of hard edges. Hm, but that was about it regarding the field of view and their workaround… nothing to be seen here, unfortunately.

The community spotlight demos were not really worth discussing. But the three creators, Sadao Tokuyama (a portal demo), Mixspace Technologies (placed annotations) and Adam Roszyk (placing photogrammetry scans inside the emulator as scale models), are not really to blame. For me, this section doesn’t make sense at all right now. It will only become useful once people have their own devices at home. The emulator is so cumbersome that I’d rather continue on my HoloLens and emulate some eye-blink trigger events, etc. on my existing device to “test” Magic Leap development. Oh, wait. Why not stay with HoloLens anyway? In Q1 2019 we will see the fresh new version…

On the bright side, what we DID see was a possibly final box design of the shipping package! They claim a late-summer delivery to the first developers.

Specs, specs, specs

But, joking aside. What did we learn? Alan Kimball, Lead of Dev. Tech Strategy, took us through some additional spec information: the system seems to be running on double ARM64 Tegra X2 CPUs with an extra dedicated Denver core for math-heavy work, plus an NVIDIA Pascal-based GPU with 256 CUDA cores. OpenGL 4.5 and OpenGL ES 3.1 are fully supported, and they recommend focusing on the Vulkan API, which is also supported, to be future-proof. With the given power he recommends a typical scene size of 200,000 to 400,000 triangles as best practice, depending on shader complexity, etc. OK, so much for the tech talk. Now, live demo!

Dodge this!

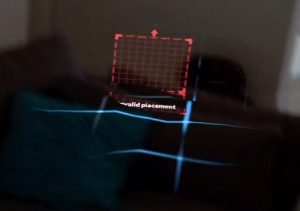

Then we met Colman Bryant from Interaction Lab showing their “Dodge” game, which is supposed to be released on the portal as a magic kit developer lesson. Here, they finally showed some “shot through device” videos. They confirmed that it was captured on device with the device’s video camera and internal compositing. But then again, you didn’t see a cropped FOV or vignette fade-outs. We still don’t know how it feels on your head. But it seems the recordings are for real, or at least not faking opacity (like in HoloLens captured videos). The compositing actually shows the additive display, with the augmented objects appearing as semi-transparent ghosts, as expected. The demo showed a miniature stone giant placed on the couch or table throwing rocks at you. The user needs to dodge these. Period. End of demo. The graphics were pretty and it could be fun for 20 seconds, but that’s it. More interesting were the small design details: during start-up, the environment is recognized and you need to place the character with a pinch gesture. We couldn’t really see the reconstructed mesh of the real world, so it’s still unknown how well the ML1 performs. But we could see both tracking jitter and wrong occlusion with real-world objects. Accuracy seems to be no higher than on typical SLAM-using phones or the HoloLens.
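To make the “semi-transparent ghosts” point a bit more tangible, here is a minimal sketch of the difference between an additive display and the opaque compositing you get in faked captures. It is my own toy model, not Magic Leap’s compositing pipeline: the function names, the example colors and the simple clamp to 1.0 are all assumptions for illustration.

```python
# Toy model of per-pixel compositing. Colors are RGB tuples in the range 0.0-1.0.
# All names and values are hypothetical, chosen only to illustrate the idea.

def additive_composite(background, overlay):
    """Additive display: the overlay can only ADD light to the real world."""
    return tuple(min(1.0, b + o) for b, o in zip(background, overlay))


def opaque_composite(background, overlay, alpha):
    """Conventional alpha blend, as used in 'marketing style' captures."""
    return tuple(o * alpha + b * (1.0 - alpha) for b, o in zip(background, overlay))


bright_wall = (0.8, 0.8, 0.8)   # real-world pixel behind the hologram
black_pixel = (0.0, 0.0, 0.0)   # "black" virtual content
grey_rock = (0.4, 0.4, 0.4)     # a mid-grey virtual rock

# Black adds no light, so on an additive display it is simply invisible.
print(additive_composite(bright_wall, black_pixel))

# The rock cannot darken the wall; the wall shines through and washes it out.
print(additive_composite(bright_wall, grey_rock))

# A fully opaque blend hides the wall completely, which real glasses cannot do.
print(opaque_composite(bright_wall, grey_rock, 1.0))
```

In this model a virtual object never makes a pixel darker than the real world behind it, which is why pitch black cannot be a color on such a display and why dark content looks ghostly in front of bright backgrounds.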

From a technical point of view, they evaded answering questions again. Finger positions / gestures get recognized, but to what extent exactly, and how occlusion might work, we are still left in the dark. Within the short clip you could see the user using his or her hand to block the rock flying forward, but the hand does not get masked. No occlusion whatsoever.

So, this was all very disappointing. But what I did like were some small details, like the reaction of the stone giant when you get very close to him very quickly: he blocks you off with his hand. This is a tiny but fun detail to show the concept of personal space for the virtual character! They talked about invasion of personal space and how to create better experiences using eye tracking, distances between user and virtual objects, etc. To help with this design they promise a toolkit that lets you query the detected space, like “find me a good place to sit on” (which we know from Microsoft’s toolkit). I also liked some details when they were talking about design challenges: how to integrate objects into real space, how to create something new that only works in AR: “Good mixed reality design is embracing the real world, not overwriting it.” Especially multi-user scenarios will be awesome, with everyone seeing the same virtual cat (confirming sociologically that I didn’t go crazy myself) or enjoying content together.
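The personal-space reaction of the stone giant could be driven by something as simple as a distance and approach-speed check. The following is only a guess at the logic, not how Magic Leap implemented it: the function name, the 0.6 m radius and the 1.0 m/s speed threshold are all made up for illustration.

```python
import math

# Hypothetical sketch: positions are (x, y, z) tuples in meters,
# dt is the time between the two head-pose samples in seconds.

def invades_personal_space(prev_head, cur_head, character_pos, dt,
                           radius=0.6, min_speed=1.0):
    """Return True if the user is inside the character's personal space
    AND got there by approaching quickly (assumed thresholds)."""
    d_prev = math.dist(prev_head, character_pos)
    d_cur = math.dist(cur_head, character_pos)
    approach_speed = (d_prev - d_cur) / dt  # positive when moving closer
    return d_cur < radius and approach_speed > min_speed


giant = (0.0, 0.0, 0.0)

# Lunging from 1.5 m to 0.4 m within half a second: the giant should block you.
print(invades_personal_space((0.0, 0.0, 1.5), (0.0, 0.0, 0.4), giant, dt=0.5))

# Creeping slowly from 0.45 m to 0.4 m: close, but not aggressive.
print(invades_personal_space((0.0, 0.0, 0.45), (0.0, 0.0, 0.4), giant, dt=0.5))
```

A real experience would probably smooth the head pose first (we did see tracking jitter, after all) and mix in eye tracking, but the principle of reacting to distance plus speed stays the same.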

But overall, that was all for the day. I’m sure they are having a good time and have great content ideas, but the pace of it all is not really promising. Where are the big-bang demos (like the one we saw initially, in cooperation with WETA, teasing some big robot attack game)? Where are live demos in the style of the mixed reality captures the competitors do? It still feels like vapourware and honestly, we’ve seen better. If Microsoft shows up in 2019 with their new device, which will probably also be slimmer and possibly even integrate some updated display tech: why switch? They already have a very established user base and a planned strategy for a mixed reality cross-device operating system. Before I saw the show, I thought this would change “once I’ve seen it in action”. But, sorry. Naaaah, it didn’t. Cycling back to my initial question: how would they try to convince us of a great experience? They didn’t. Again.

Does it appear closer than it all is?

So, I’m not talking about the scales and dimensions of augmented objects here, but rather about availability! Are the glasses close to release? Or do they still appear closer than they are? Online, Magic Leap recently posted a job offer for a head of distribution… seems like this part is still being built up. They reconfirmed the release for 2018 (which only ends on the 31st of December… still some time), whatever the late-summer date may turn out to be… Rony Abovitz just posted some more teasers on Twitter regarding upcoming information on the One and the “Next” steps: “Some cool bits coming this week, about ML1 and also some hints at ML Next”… now AT&T is on board. OK, and then what? It’s getting ridiculous with their micro news and their teasing of the media circus (including me). Oh, well. We’ll find out eventually…

Stay tuned!