Recognizing & categorizing the world

Hi all,

today I want to share another paper published by Microsoft. It’s titled “Interactive 3D Labeling and Learning at your Fingertips” and demonstrates how their system recognizes objects online, on the fly, and how labelling works through voice commands.

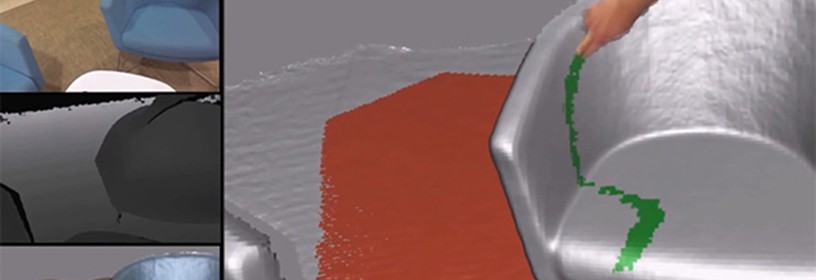

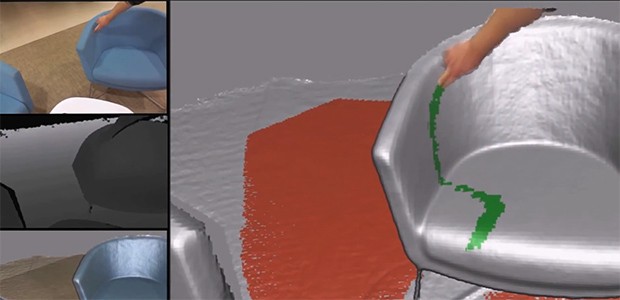

Their demo gives us some coffee tables and chairs with bananas and mugs to scan. You can nicely see how the system creates joint surfaces by “crawling” over the voxels. Their paper goes into detail on how their new volumetric inference technique, based on an efficient mean-field approximation, separates or combines the scanned bits and pieces into valid objects. Interactive user input helps the system learn a new object class (e.g. by swiping over the chair). Take a look:

For now it is still a limited demo with coarse results, but we can clearly see where things are heading! Imagine having all recognized objects synced to the cloud among all users. Very soon Microsoft (or others) could create huge databases of everyday objects, helping machines to see and to really understand the objects around us semantically! I’m not going in the scary direction now. The cool applications? Let the blind get audio descriptions of objects and places, or use it for independent robot navigation or for new gaming experiences. In games, your system could fully understand your environment (not only a depth map of it for occlusions) and integrate it into the virtual game space. Be it physics behaviour (to make the virtual pieces feel more real) or completely new game concepts where you must use the correct real-world props to interact with the virtual world! Exciting!