Microsoft HoloLens to overtake the Leapers? – The race for the best AR is on!

Magic Leap, the still very mysterious AR company from Florida (does it really exist?), files new patents and lets us all speculate about what it might be working on… Meanwhile, Microsoft presents new AR goggles called “HoloLens” that actually exist and let us see the demos for real. (Ah yeah, and by the way, they also presented Windows 10…) While Google kills the Glass project (to bring it to production), Microsoft seems to be getting ahead in the race!

Magic Leap

Of course, a patent by itself does not mean that anything depicted or described in it will ever actually see the light of day… But it gives some hints about what they dream of and what the interaction and hardware could look like. The Verge put together an overview with more screenshots from the patent. We can learn a few things from it: it seems that Magic Leap is aiming for an HMD device similar to all the others, and that they are also looking into gesture control and fingertip control to trigger actions. Their application ideas range from AR living rooms, shopping cart extensions showing your shopping list, in-store cereal advertisement games and augmented live 3D views of organs to virtual recreation holidays… but the current status of their hardware and software still remains in the dark.

Microsoft HoloLens

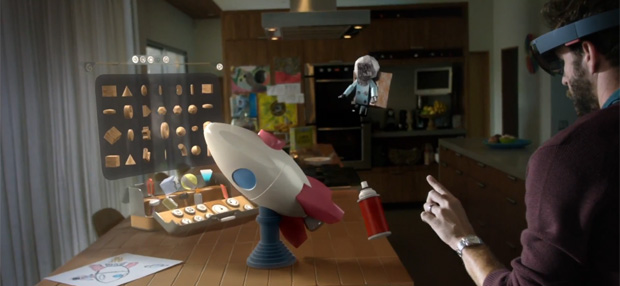

In the meantime, Microsoft steps in and presents, alongside the Windows 10 introduction, its new gadget and software: HoloLens! Let’s enjoy the marketing video before diving into some details and a live demo below:

Of course, we have seen well-made marketing videos before (this one definitely takes its place among them!), but below we will look at the real status of things. The name is a bit misleading, since we don’t get real holograms (that float in mid-air and can be seen without any additional devices), but it is still a cool term, and the “lens” part explains the “limitation” of having to look through a special set of glasses. Microsoft shows first applications: augmented Minecraft (is it still being played? really?), Skype calls while on the go and a virtual Mars visit with “OnSight”. Special content creation software called “HoloStudio” will be made available alongside it! Sounds good! So, how about reality? Let’s watch:

First of all: a great live demo! Not like in the old days, when a blue screen of death popped up on connecting a printer to Windows… The see-through HMD has its own integrated high-end CPU, GPU, WiFi hardware and spatial sound. No cables are needed and no connection to a base station. Additionally, a third processing unit, the HPU (holographic processing unit), is introduced. It will take care of all the additional tasks that are needed in this immersive setup: understanding where you stand and where you look, understanding your surroundings, and interpreting your voice and gestures. No markers or external tracking are needed anymore. They then jump into a neat live demo of creating a quadcopter using voice, gesture and gaze control.

While the tracking is a tiny bit shaky at the beginning (looking at the post), it seems very stable later on in these controlled conditions. Unfortunately, they don’t say a word about how the tracking works or how much setup time is needed. Is the room scanned initially, with the 3D scan data stored on the device? … or in the cloud, to be reused by others when they enter the same room? Has this already been answered? It would have been nice to see the accuracy and speed of the 3D reconstruction. Also, they don’t say anything about the “advanced sensors” that are integrated. I’m pretty sure they ship a miniature version of a Kinect-style depth sensor. They also say nothing about outdoor usage: will the same tracking work on the go in large environments? Will it all work without a WiFi connection?

I like the interaction that mixes voice control with gesture and pinch controls to grab, move and glue pieces together. Time to play around and research more suitable forms of interaction! For example, I have never liked holding a finger still in mid-air for 3 seconds to trigger a mid-air button (used here to start HoloStudio). It just feels cumbersome. But hey, they are just getting started, and it’s always hard without tactile feedback…

The briefly mentioned gaze recognition could have a huge impact… as long as it is not only used for silly “I look at a button for 3 seconds and it opens Facebook” actions. I want it for smarter interactions, where the CPU or HPU checks the context and understands what I want, to make interaction easier and faster. I also want it for more realistic visualization in stereo 3D! I want real depth of field that adjusts automatically, so my eyes don’t break when they try to converge on something far away while focusing on the screen right in front of them… I hope Microsoft addresses this very big problem (which all HMD-based AR has) as well! Unfortunately, there was no word at all about rendering, screen specs or stereopsis.

If Microsoft considers all these topics during development, from human visual perception to good ways of interacting, I will happily accept that they jump straight to Windows #10 and skip the 9. Then we will really have a new way of using computers! Then I will happily throw away my mouse and keyboard and enter the new era!