Keynote #2: Terry Peters with Bloody Insights

Hi all,

The second great keynote came from Terry Peters on Thursday. He works in medical research at the Imaging Research Laboratories of the Robarts Research Institute in London, Ontario, Canada. He pioneered many of the image-guidance techniques and applications for image-guided neurosurgery, and his lab has been focusing on progress in this area since 1997. He presented his latest work under the title “The Role of Augmented Reality Displays for Guiding Intra-cardiac Interventions”.

Turning to cardiac surgery today, he presented the dilemma surgeons face: either you open the heart (this led to bloody pictures as a good start to the day… but the audience seemed stable ;-)) and can see everything, or you use minimally invasive techniques, where you need extra hardware such as X-ray, ultrasound, or RGB cameras to get a picture of what you are doing. With the current (and older) approaches, that image quality is very poor.

Using heart valve repair as an example, he showed the newer TAVI method (transcatheter aortic valve implantation), which gave better results but still does not reach the hoped-for visual quality. Money spent on TAVI has typically gone into better valve designs or other technical aspects rather than into visualization. TAVI outcome statistics have reached a plateau comparable to the results of the conventional method. The main reason quality stopped improving was the lack of investment in imaging and visualization techniques: for example, intracardiac structures are poorly represented (giving the surgeon little information), and a very small field of view makes navigation harder.

With an augmented guidance system, they hope for significantly better implantation outcomes with fewer complications during surgery. As a basis, they use TEE (transesophageal echocardiography), whose most important advantage is that it delivers real-time images to work with, although still with a limited field of view (I hope I got this all right). They take two of these ultrasound images and show them as two billboard video planes crossed at 90° in a virtual scene, and they add all tracked items to this virtual space as well: probes and tools are tracked in 6 degrees of freedom by a magnetic tracking system (with tiny sensors smaller than the tip of a pencil). Additionally, a simplified but sufficient model of the patient’s organs is created, and all this information is fed into the guidance platform software.
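For readers who want to picture the crossed billboard planes, here is a minimal Python/NumPy sketch of the geometry. It rests on my own assumptions, not on the lab’s actual software: the magnetic tracker reports the TEE probe pose as a 4×4 transform, the probe-to-image calibration is simply the identity, and the second plane is the first one rotated 90° about their shared depth axis. The helper names (`pose_matrix`, `rot_y`, `plane_corners_world`) and all numbers are hypothetical.

```python
import numpy as np


def pose_matrix(rotation, translation):
    """Build a 4x4 homogeneous transform from a 3x3 rotation and a 3-vector translation."""
    T = np.eye(4)
    T[:3, :3] = rotation
    T[:3, 3] = translation
    return T


def rot_y(deg):
    """Rotation about the image y axis (assumed here to be the ultrasound depth direction)."""
    a = np.radians(deg)
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])


def plane_corners_world(world_from_sensor, sensor_from_image, width_mm, depth_mm):
    """World coordinates (4 x 3) of the corners of a rectangular ultrasound image plane.

    In the image frame the plane is z = 0, x runs laterally (centered on the
    transducer) and y runs along the imaging depth.
    """
    half = width_mm / 2.0
    corners = np.array([
        [-half, 0.0, 0.0, 1.0],
        [half, 0.0, 0.0, 1.0],
        [half, depth_mm, 0.0, 1.0],
        [-half, depth_mm, 0.0, 1.0],
    ]).T  # one homogeneous point per column
    world = world_from_sensor @ sensor_from_image @ corners
    return world[:3].T


# Hypothetical tracker reading for the TEE probe sensor (rotation + position in mm).
world_from_sensor = pose_matrix(rot_y(15.0), [10.0, -40.0, 120.0])
# Hypothetical calibration: the first image frame coincides with the sensor frame.
image_a = np.eye(4)
# Second plane: the first one rotated 90 degrees about the shared depth axis.
image_b = pose_matrix(rot_y(90.0), [0.0, 0.0, 0.0])

plane_a = plane_corners_world(world_from_sensor, image_a, width_mm=60.0, depth_mm=80.0)
plane_b = plane_corners_world(world_from_sensor, image_b, width_mm=60.0, depth_mm=80.0)
print(plane_a.round(1))
print(plane_b.round(1))
```

The same composition (tracker pose times a fixed calibration) is how any other 6-DoF tracked tool could be placed into the virtual scene.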

The tricky part is the model-to-patient registration, which is solved in real time in multiple steps with verification loops. The combined virtual view of all these pieces is then presented either on a stereo head-mounted display or on a plain monitor. Surprisingly, the monitor won in longer tests because it was less distracting.
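The talk did not go into which registration algorithm is used, so purely as an illustration, here is a common textbook technique in Python/NumPy: paired-point rigid registration via SVD (Kabsch / Arun et al.), followed by an RMS-error check standing in for one “verification loop”. The landmark pairs, noise level, and 2 mm tolerance are made-up assumptions, and `rigid_register` / `rms_error` are hypothetical helper names.

```python
import numpy as np


def rigid_register(model_pts, patient_pts):
    """Least-squares rigid transform (R, t) such that patient ~= R @ model + t.

    Both inputs are N x 3 arrays; row i of each must be the same landmark.
    SVD-based solution (Kabsch / Arun et al.) with a guard against reflections.
    """
    mu_m = model_pts.mean(axis=0)
    mu_p = patient_pts.mean(axis=0)
    H = (model_pts - mu_m).T @ (patient_pts - mu_p)  # 3 x 3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = mu_p - R @ mu_m
    return R, t


def rms_error(model_pts, patient_pts, R, t):
    """Root-mean-square distance (input units) between transformed model and patient points."""
    residual = model_pts @ R.T + t - patient_pts
    return float(np.sqrt((residual ** 2).sum(axis=1).mean()))


# Hypothetical demo: 6 model landmarks (mm) and their "measured" patient positions,
# generated from a known pose plus a little simulated tracking noise.
rng = np.random.default_rng(0)
model = rng.uniform(-30.0, 30.0, size=(6, 3))
angle = np.radians(20.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle), np.cos(angle), 0.0],
                   [0.0, 0.0, 1.0]])
t_true = np.array([5.0, -12.0, 40.0])
patient = model @ R_true.T + t_true + rng.normal(0.0, 0.3, size=model.shape)

R, t = rigid_register(model, patient)
error_mm = rms_error(model, patient, R, t)
TOLERANCE_MM = 2.0  # hypothetical acceptance threshold for the verification check
print(f"RMS error: {error_mm:.2f} mm, accepted: {error_mm < TOLERANCE_MM}")
```

A real cardiac setup also has to cope with the beating heart, which this rigid, single-shot sketch ignores.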

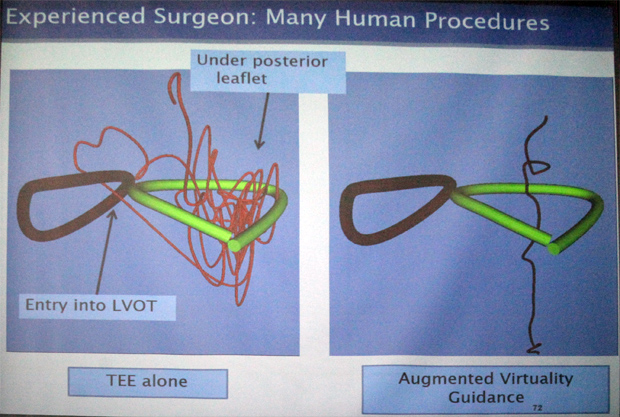

Comparing TEE alone with TEE combined with the augmented virtual representation showed that the latter gives far better placement results, even for experienced surgeons (see the image, which shows the path taken by the instrument). In short: drastically increased accuracy, and with it better performance/speed and safety.

The next step, Mr. Peters says, would be to get rid of the ultrasound imagery entirely and work only in a virtual representation (bad news for AR there…). Given high enough accuracy, that would simply be less confusing and easier to work with.

Wow. I loved the insight into an entirely different area, even though quite a few bloody insights were involved. It was great to see how CGI/VR/AR, together with tracking of all tools and even moving organs, results in a big step forward toward safer and better surgery!

P.S. Hey Christoph, maybe you’ll also blog about the keynote on medicalaugmentedreality? I guess I’m not the one for the medical details! ;-)