Keynote #1: Takeo Kanade doing the Ridiculous

Hi everybody,

let’s kick things off with the first official main-conference day: Wednesday!

After a warm welcome from Gudrun Klinker and Nassir Navab of TU Munich, the chairs of the organizing committee, the first keynote began: “A new active Augmented Reality that improves how the reality appears to a human” by Takeo Kanade! Having Mr. Kanade share his thoughts on AR and reality in the very first talk was a great honor for the audience and the ISMAR team. He is one of the major research drivers in robotics and computer vision and has worked for and founded several institutes, but he is probably best known for his work at Carnegie Mellon University: from early robotics and face recognition in the 70s to autonomous driving, he has led a large number of projects and publications in these fields, and you can always expect some cutting-edge ideas from him!

At ISMAR, he wanted to focus on his “Smart Headlight” project for cars, but started off with an aquarium demo: it turned out to be an air display that used falling water drops as the projection surface for a virtual aquarium. The initial position of each drop (and the time it was released) was measured, and its subsequent positions over time were calculated, so that colors could be projected onto each drop separately and exclusively! A neat demo that, in Mr. Kanade’s vision, could scale up to an epic version: imagine it starts raining during a baseball game… instead of being annoyed by the rain, the spectators could see the biggest 3D virtual screen of all time, with content projected onto every raindrop in the stadium! Wow! OK, here it starts to sound megalomaniac. :-)
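The talk did not go into how the drop positions were computed, but the principle of measuring each drop's release and then predicting where it is can be sketched with textbook free-fall kinematics. The following is a minimal Python sketch under stated assumptions: drag-free fall from a fixed nozzle height, and hypothetical names (`Drop`, `drop_height`, `drops_in_air`) that are not taken from CMU's system.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple

G = 9.81  # gravitational acceleration (m/s^2); air drag is ignored (assumption)


@dataclass
class Drop:
    x: float             # horizontal position of the nozzle that released the drop (m)
    release_time: float  # measured release time (s)


def drop_height(drop: Drop, t: float, nozzle_height: float) -> Optional[float]:
    """Height above the basin at time t, or None if not released yet or already landed."""
    dt = t - drop.release_time
    if dt < 0:
        return None
    y = nozzle_height - 0.5 * G * dt * dt
    return y if y >= 0 else None


def drops_in_air(drops: List[Drop], t: float, nozzle_height: float) -> List[Tuple[float, float]]:
    """(x, y) of every drop currently falling: the spots the projector should light.

    In an aquarium display, the color for each spot would be sampled from the
    virtual scene at (x, y).
    """
    spots = []
    for d in drops:
        y = drop_height(d, t, nozzle_height)
        if y is not None:
            spots.append((d.x, y))
    return spots


if __name__ == "__main__":
    drops = [Drop(x=0.0, release_time=0.0), Drop(x=0.1, release_time=0.2)]
    # Only the first drop has been released at t = 0.1 s.
    print(drops_in_air(drops, t=0.1, nozzle_height=1.0))
```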

But how did he arrive at that idea? It actually started with one piece of the “Smart Headlight” concept: use high-speed cameras and sensors in your car to scan rainfall and snow while driving at night, and make all the drops invisible by selectively not illuminating them… in any weather, wind and driving speed. Mr. Kanade: “You might say this is ridiculous.” (Well, yeah, +1!) But it turns out they got pretty damn good results and actually built a working system!

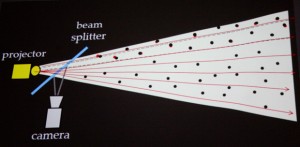

The complete field of view is scanned in hard real time to detect every single snowflake or raindrop and to forecast its trajectory, taking side wind, car speed, etc. into account! With the positions known, individual projector pixels can be switched off so that the drops are not illuminated. This way, they do not reflect light back toward the driver, resulting in better visibility.
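To make the "forecast and switch off" step concrete, here is a minimal Python sketch, not CMU's implementation. It assumes the camera and projector share one viewpoint, models each drop with constant velocity relative to the car over the system latency (side wind, fall speed, car speed), projects it with a simple pinhole model and clears a small square of pixels around it. All names and parameters (`predict`, `to_pixel`, `headlight_mask`, `focal_px`, `radius`) are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Drop:
    x: float  # lateral offset from the headlight (m), right is positive
    y: float  # height relative to the headlight (m), up is positive
    z: float  # distance ahead of the headlight (m)


def predict(drop: Drop, latency: float, fall_speed: float, wind: float, car_speed: float) -> Drop:
    """Constant-velocity prediction in the car's frame over the system latency.

    Relative to the car, a drop drifts sideways with the wind, falls at an
    assumed constant (terminal) speed, and approaches at the car's speed.
    """
    return Drop(drop.x + wind * latency,
                drop.y - fall_speed * latency,
                drop.z - car_speed * latency)


def to_pixel(d: Drop, focal_px: float, cx: float, cy: float) -> Optional[Tuple[int, int]]:
    """Pinhole projection into projector pixels (image rows grow downward)."""
    if d.z <= 0:
        return None  # at or behind the headlight: nothing to mask
    return round(cx + focal_px * d.x / d.z), round(cy - focal_px * d.y / d.z)


def headlight_mask(drops: List[Drop], width: int, height: int, latency: float,
                   fall_speed: float, wind: float, car_speed: float,
                   focal_px: float, radius: int = 1) -> List[List[int]]:
    """Return a height x width mask: 1 = pixel lit, 0 = switched off to skip a drop."""
    mask = [[1] * width for _ in range(height)]
    cx, cy = width / 2, height / 2
    for drop in drops:
        px = to_pixel(predict(drop, latency, fall_speed, wind, car_speed), focal_px, cx, cy)
        if px is None:
            continue
        u, v = px
        # Clear a (2*radius+1)^2 square around the predicted drop, clipped to the frame.
        for vv in range(v - radius, v + radius + 1):
            for uu in range(u - radius, u + radius + 1):
                if 0 <= uu < width and 0 <= vv < height:
                    mask[vv][uu] = 0
    return mask
```

A real system would work on a full-resolution DLP frame with calibrated camera-projector geometry; the pure-Python nested lists just keep the sketch dependency-free.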

The system uses a modified DLP projector with 4000 lumens and a 1700 Hz update rate, with an overall system reaction time of 1.5 milliseconds (2013 version)! That is 500 times faster than the average driver can react to anything! In rain the improvement was 70% with a 5-10% loss of light, and in snow 60% (with 10-15% light loss). While capture and image analysis were pretty damn quick, the most time-consuming part was transmitting the data between the components. If this could be sped up, the whole technology could take a big leap forward! On the complexity of the problem, Mr. Kanade said, only half joking: if you make it faster, the problem gets easier to solve! Indeed, you can avoid many problems when there is less latency between data acquisition, processing and response!
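A quick back-of-the-envelope calculation shows why latency matters so much. The 1700 Hz and 1.5 ms figures come from the talk; the driver reaction time (750 ms, which is what "500 times faster" implies), the raindrop fall speed and the 100 km/h car speed are assumptions for illustration only.

```python
# Figures quoted in the keynote (2013 version of the system).
UPDATE_RATE_HZ = 1700
SYSTEM_LATENCY_S = 1.5e-3

# Assumptions for illustration only, not from the talk.
DRIVER_REACTION_S = 0.75   # implied by "500 times faster" (500 x 1.5 ms)
RAIN_FALL_SPEED = 9.0      # rough terminal speed of a large raindrop (m/s)
CAR_SPEED = 100 / 3.6      # 100 km/h expressed in m/s


def displacement_mm(speed_m_s: float, latency_s: float) -> float:
    """Distance covered at speed_m_s during latency_s, in millimetres."""
    return speed_m_s * latency_s * 1000


frame_period_ms = 1000 / UPDATE_RATE_HZ            # time between projector updates
speedup = DRIVER_REACTION_S / SYSTEM_LATENCY_S      # system vs. human reaction time
fall_mm = displacement_mm(RAIN_FALL_SPEED, SYSTEM_LATENCY_S)
approach_mm = displacement_mm(CAR_SPEED, SYSTEM_LATENCY_S)

print(f"frame period: {frame_period_ms:.3f} ms")
print(f"speed-up over the driver: {speedup:.0f}x")
print(f"during one system reaction the drop falls {fall_mm:.1f} mm "
      f"and the car advances {approach_mm:.1f} mm")
```

With these numbers a drop falls about 13.5 mm and the car advances about 41.7 mm during one system reaction. Both distances scale linearly with latency, so halving the latency halves how far the prediction has to reach, which is one way to read "if you make it faster, the problem gets easier".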

Above, you can see the system with a smart headlight mounted on a car. Mr. Kanade went on to further use cases for the setup. One of them addresses “the glare problem”: keeping your high beams on blinds the drivers of oncoming cars. Everybody has been in the situation where they forgot to switch them off while enjoying the better view at night… The system can automatically spare the areas where oncoming drivers have their eyes, or where the car ahead has its rear-view mirrors, etc. Everybody could keep their high beams on all the time and gain safety!

He presented further applications, including enhanced road contrast (helping the driver recognize the lanes better) and object highlighting: cyclists or pedestrians can be tracked and highlighted (by casting light onto them, adjusting brightness or color in those regions, etc.)… The driver gets an augmented reality projected into physical space that helps him or her act more safely in the real world. No display is used; instead, reality itself, or rather how it appears to a human, is improved! Yeah, love it.
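Both the anti-glare high beam and the highlighting of cyclists or pedestrians boil down to controlling the light per pixel. A minimal Python sketch, assuming detections already arrive as pixel rectangles in projector coordinates (the `Box` type, the intensity levels and the margin are hypothetical): glare regions are blanked with a safety margin, tracked objects are boosted, and everything else gets the normal high-beam level.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Box:
    """Axis-aligned region in projector pixels; right and bottom are exclusive."""
    left: int
    top: int
    right: int
    bottom: int


def _fill(img: List[List[float]], box: Box, value: float, margin: int = 0) -> None:
    """Set every pixel of box (grown by margin, clipped to the image) to value."""
    height, width = len(img), len(img[0])
    for y in range(max(0, box.top - margin), min(height, box.bottom + margin)):
        for x in range(max(0, box.left - margin), min(width, box.right + margin)):
            img[y][x] = value


def intensity_map(width: int, height: int, glare_boxes: List[Box], highlight_boxes: List[Box],
                  base: float = 0.6, highlight: float = 1.0, margin: int = 2) -> List[List[float]]:
    """Per-pixel headlight intensity in [0, 1].

    base:      everyday high-beam level (hypothetical value)
    highlight: level for tracked objects such as cyclists or pedestrians
    glare:     oncoming drivers' eyes or mirrors, blanked to 0 with a safety margin;
               applied last so that glare protection always wins over highlighting
    """
    img = [[base] * width for _ in range(height)]
    for box in highlight_boxes:
        _fill(img, box, highlight)
    for box in glare_boxes:
        _fill(img, box, 0.0, margin)
    return img
```

Applying the glare boxes last is a deliberate choice in this sketch: if a tracked object and an oncoming driver's eyes overlap, not blinding the driver wins.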

Asked what stationary use cases he sees, he showed a demo of bouncing ping-pong balls being tracked in real time and projected onto, while the rest of the room stayed in complete darkness. This really is an awesome high-tech version of all the projection mapping we are all trying to do better! A system that projects onto objects at a 1700 Hz update rate can really take entertaining (and useful) projection-mapping AR shows to a fascinating new level!!
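At 1700 Hz the projector only has to look a few milliseconds ahead, so even a simple physics model can compensate for latency when projecting onto a bouncing ball. The sketch below is an assumption-laden illustration, not the method used in the demo: 2-D motion, no air drag, a flat floor, and a hypothetical coefficient of restitution. The hypothetical `predict_ball` returns where the ball should be after `dt` seconds, which is where the projector would aim.

```python
import math
from typing import Tuple

G = 9.81  # gravitational acceleration (m/s^2)


def predict_ball(x: float, y: float, vx: float, vy: float, dt: float,
                 restitution: float = 0.85, floor: float = 0.0,
                 max_bounces: int = 10) -> Tuple[float, float]:
    """Predict a ball's (x, y) position dt seconds ahead.

    Assumptions: 2-D motion, no air drag, a flat floor at height `floor`,
    and bounces that flip the vertical velocity and damp it by `restitution`.
    """
    t_left = dt
    for _ in range(max_bounces + 1):
        h = max(0.0, y - floor)
        # Positive root of floor = y + vy*t - 0.5*G*t^2: time until the next impact.
        t_hit = (vy + math.sqrt(vy * vy + 2 * G * h)) / G
        if t_hit >= t_left:
            # No (further) bounce within the horizon: plain projectile motion.
            return x + vx * t_left, y + vy * t_left - 0.5 * G * t_left ** 2
        # Advance to the impact, then reflect and damp the vertical velocity.
        x += vx * t_hit
        y = floor
        vy = -(vy - G * t_hit) * restitution
        t_left -= t_hit
    # Too many bounces within the horizon: treat the ball as resting on the floor.
    return x, floor


if __name__ == "__main__":
    # Ball 1 m up, moving sideways at 2 m/s; aim for where it will be in 1.5 ms.
    print(predict_ball(0.0, 1.0, 2.0, 0.0, dt=1.5e-3))
```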

Asked about the future of the technology, he imagined head-worn personal projectors (avoiding cumbersome glasses). People could finally remember the names of everyone they talk to by having them projected right onto them. ;-)

I really loved the demonstrations and systems presented, and I am very happy to see so much belief in projecting information into the world and altering it directly: overlaying reality in a way that is visible to everyone, helping people with additional information without introducing new distracting devices! Great stuff!

You should definitely read more on CMU’s Smart Headlight website to get all the details! What a start to three awesome ISMAR days!